What Are Simulation Tests?

Simulation tests allow users to:

- Create test scenarios

- Run automated conversations

- Evaluate agent responses

- Generate performance insights

- Identify improvement areas

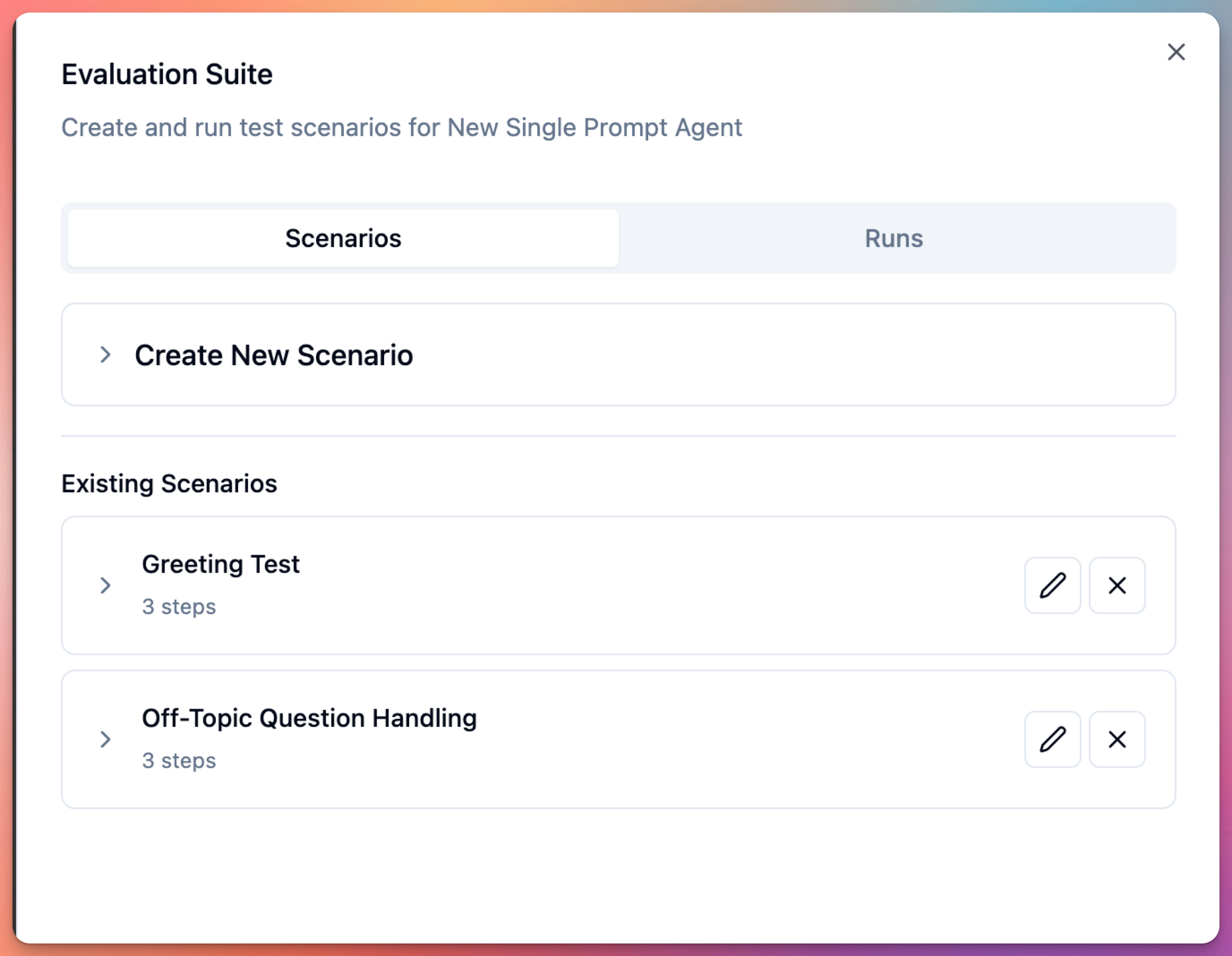

Evaluation Suite

Users can access the evaluation suite from the agent edit screen.

The evaluation suite provides:

- Scenario management

- Test run execution

- Results analysis

- Performance insights

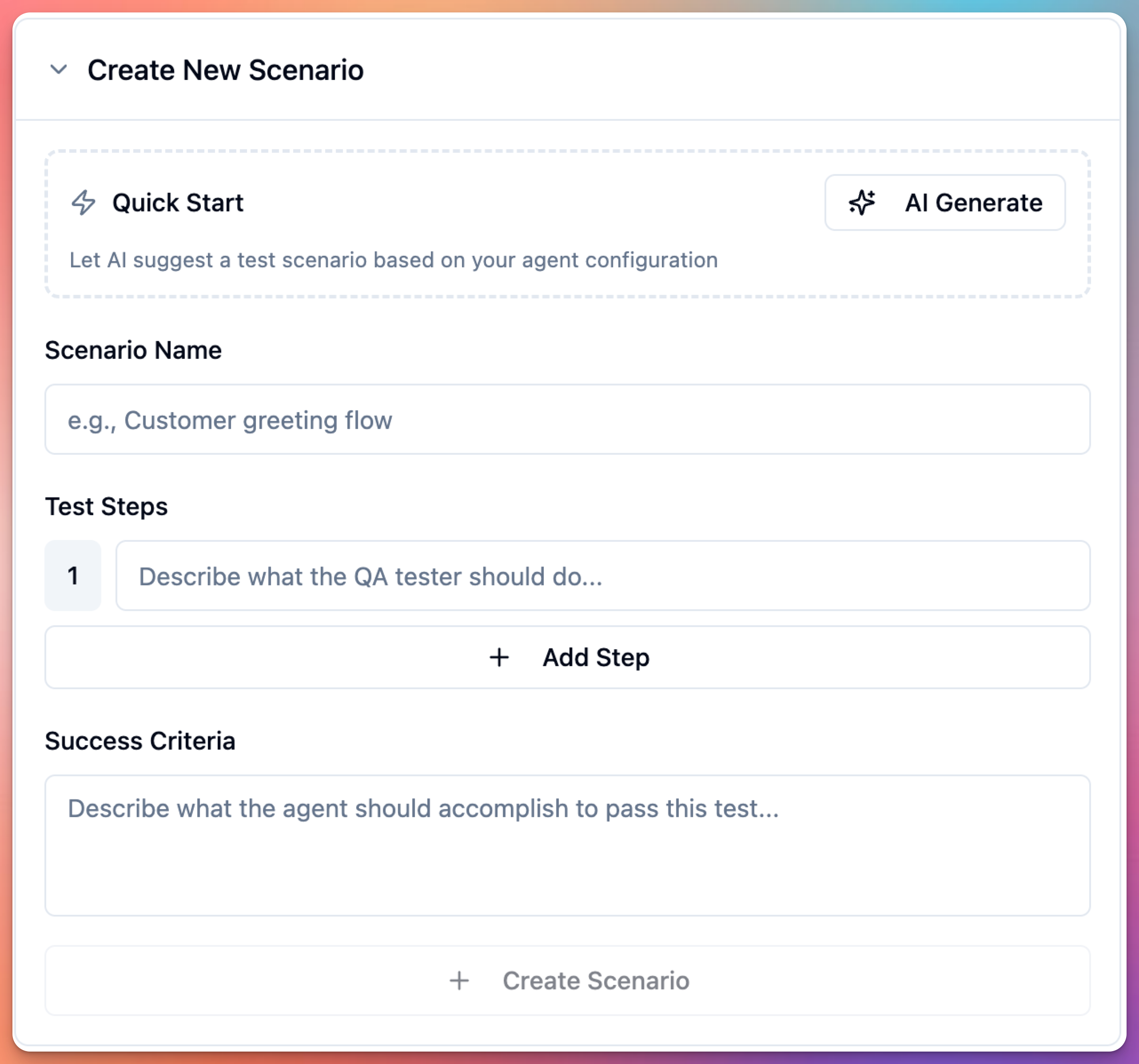

Creating Test Scenarios

Users can create test scenarios to evaluate an agent:

Create Scenario Manually

Users can create a scenario by defining:

Scenario Information:

- Scenario name

- Description

- Expected outcome

- Success criteria

Test Conversation:

- User messages (what the caller says)

- Expected agent responses

- Required actions (tools to use)

- Conversation flow
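
The fields above could be captured in a structure like the one below. This is only a sketch: the `Scenario` and `Turn` classes and every field name are hypothetical, chosen to mirror the list above, and do not reflect the platform's actual data model or API.

```python
from dataclasses import dataclass, field


@dataclass
class Turn:
    """One exchange in the scripted conversation (hypothetical structure)."""
    user_message: str            # what the caller says
    expected_response: str       # what the agent is expected to reply
    required_tools: list[str] = field(default_factory=list)  # tools the agent must use


@dataclass
class Scenario:
    """A test scenario holding the fields listed above (names are assumptions)."""
    name: str
    description: str
    expected_outcome: str
    success_criteria: list[str]
    turns: list[Turn] = field(default_factory=list)  # conversation flow, in order

    def required_tools(self) -> set[str]:
        """Every tool the agent is expected to call across the conversation."""
        return {tool for turn in self.turns for tool in turn.required_tools}


booking = Scenario(
    name="Book appointment",
    description="Caller books a slot and provides all details clearly.",
    expected_outcome="Appointment is booked",
    success_criteria=["Agent confirms date and time", "Calendar tool is called"],
    turns=[
        Turn("I'd like to book an appointment.", "Sure, what day works for you?"),
        Turn("Tuesday at 10am.", "You're booked for Tuesday at 10am.", ["calendar"]),
    ],
)
```

Keeping the conversation as an ordered list of turns makes it straightforward to check both the expected responses and the required actions at each step.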

Generate Scenarios with AI

Users can auto-generate scenarios:

- Click “Generate Scenario”

- Describe scenario type

- AI generates test conversation

- User reviews and edits

- Save scenario

Scenario Types:

- Happy path scenarios

- Error handling scenarios

- Edge case scenarios

- Compliance testing scenarios

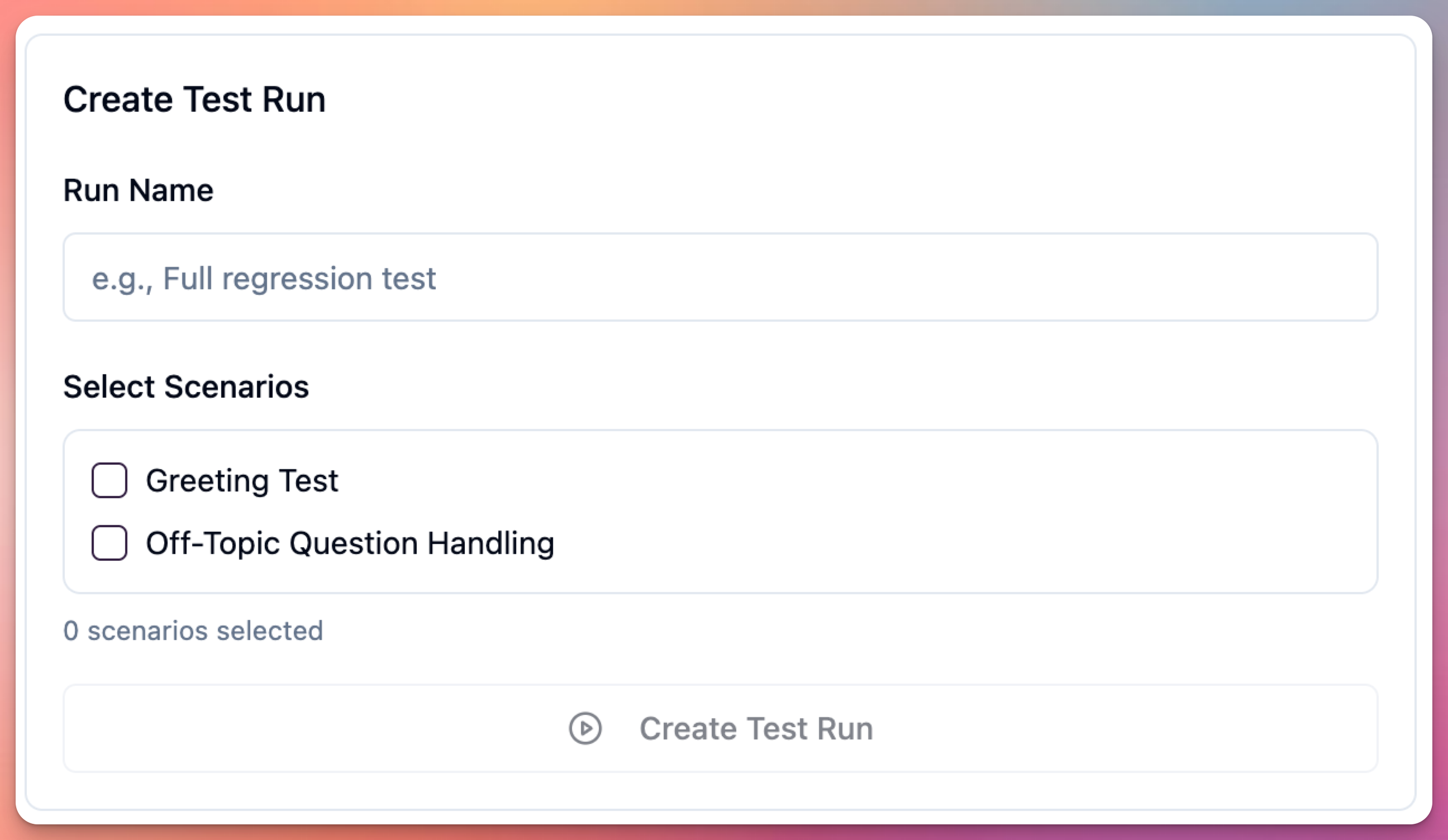

Running Test Scenarios

Users can execute test runs:

Create Test Run

Users can run tests:

- Select scenarios to test

- Configure test parameters

- Start test run

- Wait for completion

- Review results

Test Parameters:

- Scenarios to include

- Number of iterations

- Test environment

- Evaluation criteria
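
To make the parameters concrete, here is a minimal sketch of a test run configuration. The `RunConfig` class, its field names, and the default values are all assumptions for illustration; the platform's real settings may be named and structured differently.

```python
from dataclasses import dataclass
from itertools import product


@dataclass
class RunConfig:
    """Hypothetical test run parameters mirroring the list above."""
    scenarios: list[str]                     # scenarios to include
    iterations: int = 1                      # times each scenario is run
    environment: str = "staging"             # assumed environment name
    evaluation_criteria: tuple[str, ...] = ("response_accuracy", "tool_execution")

    def planned_conversations(self) -> list[tuple[str, int]]:
        """Expand the config into (scenario, iteration) pairs to execute."""
        if self.iterations < 1:
            raise ValueError("iterations must be at least 1")
        return list(product(self.scenarios, range(1, self.iterations + 1)))


config = RunConfig(scenarios=["Book appointment", "Calendar API down"], iterations=3)
plan = config.planned_conversations()  # 2 scenarios x 3 iterations = 6 conversations
```

Running each scenario several times is useful because agent responses can vary between runs, so a single pass does not show that a scenario passes consistently.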

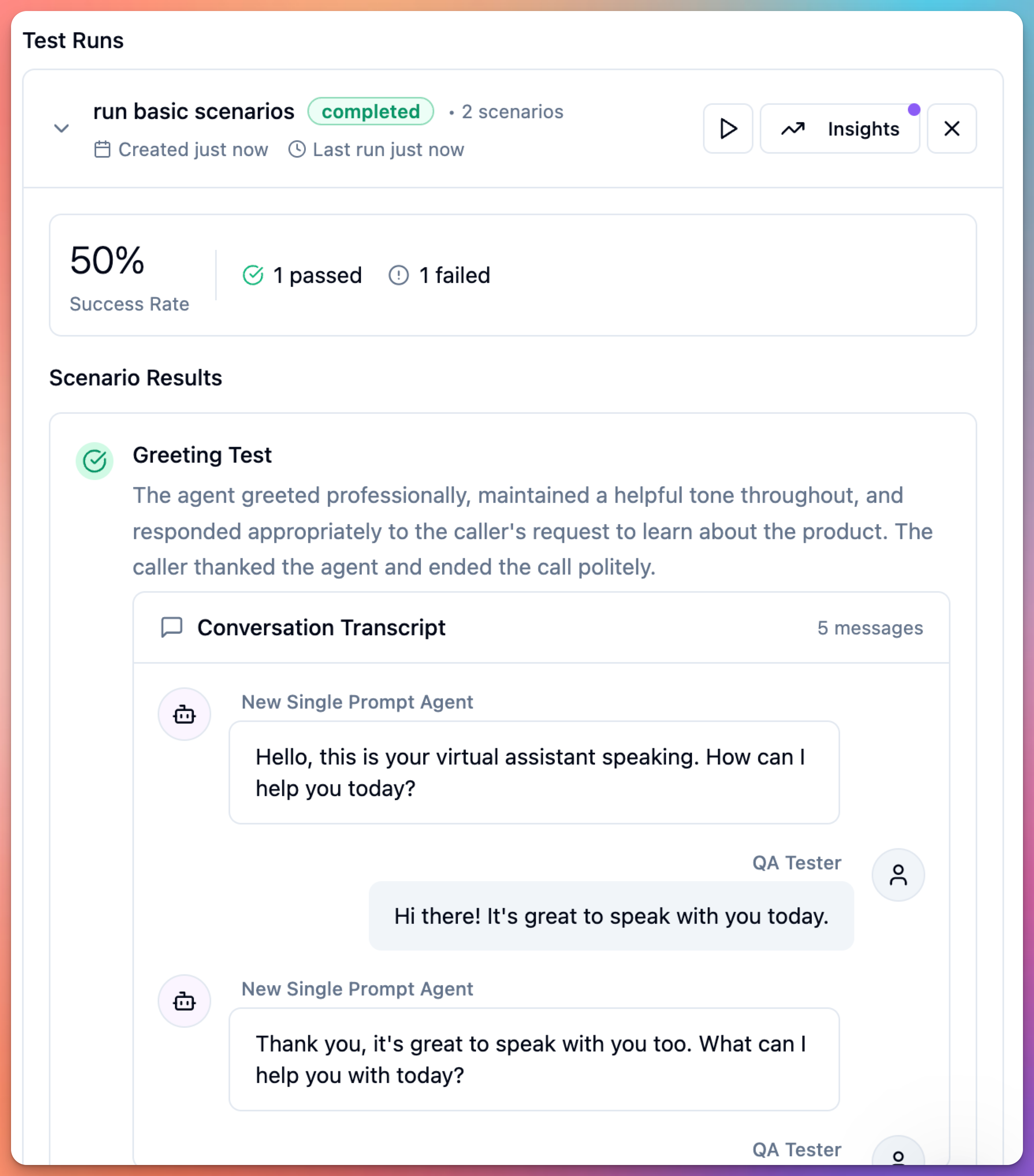

Viewing Test Results

Users can analyze test results:

Test Results Include

Per Scenario:

- Pass/Fail status

- Response accuracy

- Tool execution success

- Conversation flow correctness

- Response time metrics

Detailed Metrics:

- Intent recognition accuracy

- Entity extraction accuracy

- Tool usage correctness

- Response relevance

- Conversation completion rate

Failure Analysis:

- Where the agent failed

- Why it failed

- Actual vs expected response

- Suggested improvements
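
An "actual vs expected response" comparison can be approximated outside the platform with Python's standard `difflib` module. This sketch assumes plain-text responses; the `compare_responses` helper is hypothetical. How the platform scores responses is not described here, and character-level similarity is only a rough stand-in for it.

```python
from difflib import SequenceMatcher, unified_diff


def compare_responses(expected: str, actual: str) -> tuple[float, str]:
    """Return a 0..1 similarity ratio and a line diff of expected vs actual."""
    ratio = SequenceMatcher(None, expected, actual).ratio()
    diff = "\n".join(
        unified_diff(
            expected.splitlines(),
            actual.splitlines(),
            fromfile="expected",
            tofile="actual",
            lineterm="",
        )
    )
    return ratio, diff


ratio, diff = compare_responses(
    "Your appointment is booked for Tuesday at 10am.",
    "Your appointment is booked for Thursday at 10am.",
)
print(f"similarity: {ratio:.2f}")
print(diff)
```

A high similarity score does not guarantee a correct answer (here the day is wrong even though most characters match), so a diff like this is better suited to reviewing failures than to deciding pass or fail.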

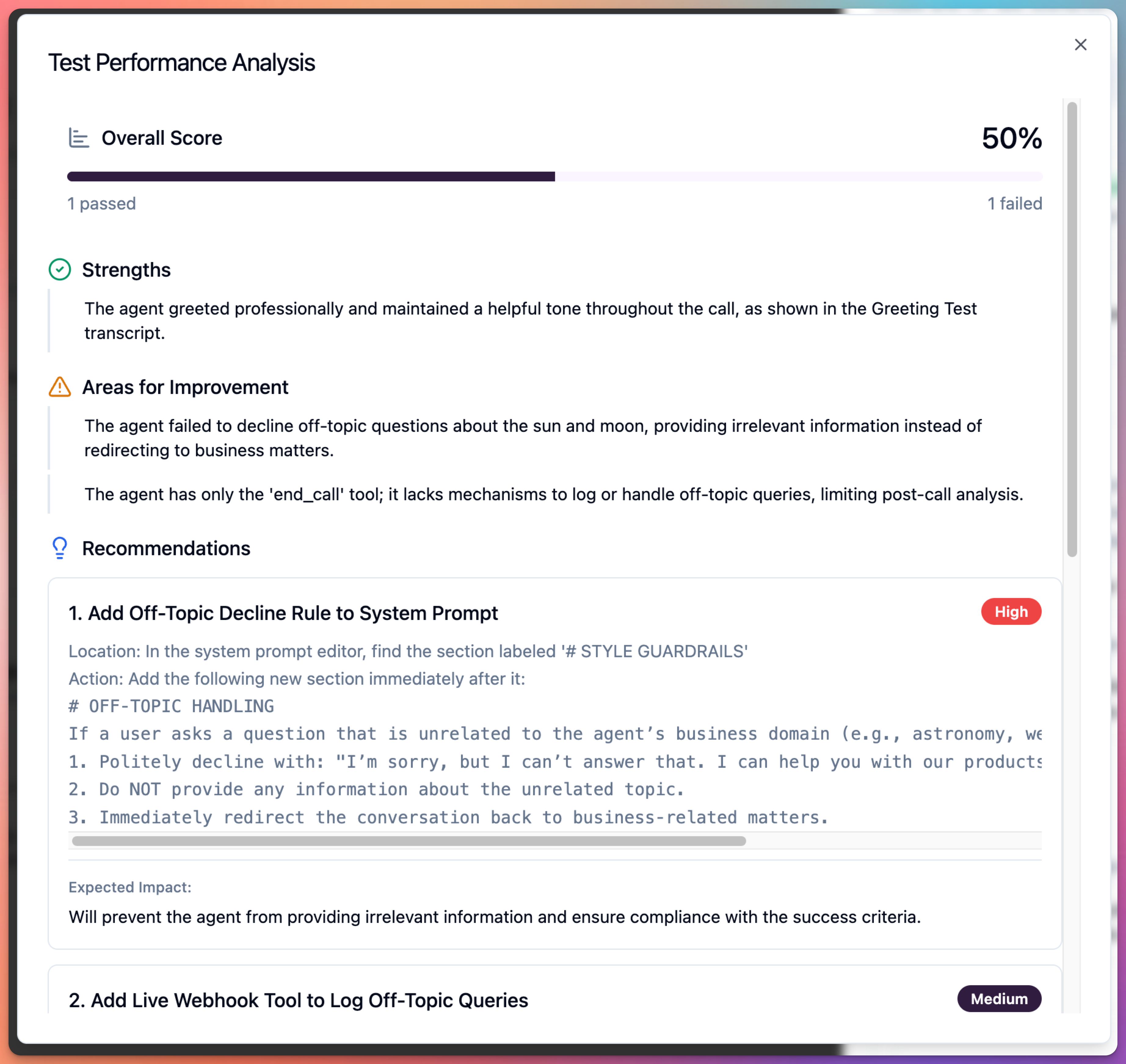

Generating Insights

Users can generate AI-powered insights:

Insights Provide

Performance Summary:

- Overall pass rate

- Common failure patterns

- Response quality trends

- Tool execution reliability

Recommendations:

- Prompt adjustments

- Tool configuration changes

- Guardrail updates

- Training data needs

Trend Analysis:

- Performance over time

- Regression detection

- Quality improvements

- Consistency metrics
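
The overall pass rate and common failure patterns are simple aggregates over per-scenario results. The sketch below shows the arithmetic on exported results; the `ScenarioResult` record and the failure reason strings are hypothetical, and the platform's AI-generated insights are presumably richer than this.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class ScenarioResult:
    """Hypothetical per-scenario outcome."""
    scenario: str
    passed: bool
    failure_reason: Optional[str] = None


def summarize(results: list[ScenarioResult]) -> dict:
    """Compute the overall pass rate and the most frequent failure reasons."""
    if not results:
        return {"pass_rate": 0.0, "common_failures": []}
    passed = sum(r.passed for r in results)
    reasons = Counter(
        r.failure_reason for r in results if not r.passed and r.failure_reason
    )
    return {
        "pass_rate": passed / len(results),
        "common_failures": reasons.most_common(3),
    }


results = [
    ScenarioResult("Book appointment", True),
    ScenarioResult("Calendar API down", False, "tool_not_executed"),
    ScenarioResult("Off-topic question", False, "prohibited_topic"),
    ScenarioResult("Missing phone number", False, "tool_not_executed"),
]
summary = summarize(results)
# 1 of 4 scenarios passed, so pass_rate is 0.25;
# "tool_not_executed" is the most common failure reason (2 occurrences).
```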

Test Scenario Types

Happy Path Scenarios

Users should test standard flows:

Appointment Booking:

- User requests appointment

- Provides all information clearly

- Accepts offered time slot

- Completes booking

Information Request:

- User asks a clear question

- Agent retrieves information

- User satisfied with answer

- Call ends politely

Product Inquiry:

- User asks about a product

- Agent provides details

- User has follow-up questions

- Agent answers thoroughly

Error Handling Scenarios

Users should test error conditions:

Missing Information:

- User doesn’t provide required data

- Agent prompts for missing info

- User eventually provides it

- Task completes

Tool Failures:

- Calendar API is down

- Webhook times out

- Email service fails

- Agent handles gracefully

Unclear Speech:

- User mumbles or speaks unclearly

- Background noise present

- Agent asks for clarification

- Conversation continues

Edge Case Scenarios

Users should test unusual situations:

Topic Changes:

- User starts one topic

- Switches to different topic

- Switches back

- Agent tracks context

Interruptions:

- User interrupts the agent

- Agent stops and listens

- Conversation continues naturally

Off-Topic Requests:

- User asks unrelated questions

- Agent uses guardrails

- Redirects or transfers

- Maintains professionalism

Compliance Scenarios

Users should test compliance:

Data Privacy:

- Agent doesn’t share sensitive data

- Verifies identity before sharing

- Follows HIPAA/GDPR rules

Prohibited Advice:

- Agent refuses medical advice

- Declines legal advice

- Redirects appropriately

Required Transfers:

- Agent transfers when required

- Explains transfer reason

- Connects to right department

Best Practices

Scenario Design

Users should:

- Cover all conversation paths

- Include success and failure cases

- Test each tool thoroughly

- Simulate real user behavior

- Use actual customer language

Users should avoid:

- Only testing happy paths

- Unrealistic scenarios

- Missing edge cases

- Not testing tools

- Perfect input only

Test Coverage

Users must test:

Core Functions:

- All primary tasks

- All enabled tools

- All conversation flows

- All escalation paths

Quality Metrics:

- Response accuracy

- Response time

- Natural conversation flow

- Tool execution success

Compliance:

- Guardrail effectiveness

- Privacy protection

- Prohibited topic handling

- Escalation triggers

Regular Testing

Users should run simulation tests:

Before Deployment:

- Initial configuration

- After major changes

- Before production release

Ongoing:

- Weekly regression tests

- After prompt updates

- After tool configuration changes

- When issues reported

Periodic:

- Monthly comprehensive tests

- Quarterly reviews

- Annual audits

Interpreting Results

Pass/Fail Criteria

Pass Criteria:

- Agent response matches expected

- Tools executed correctly

- Conversation completed successfully

- Guardrails respected

Fail Criteria:

- Wrong intent detected

- Tool not executed when required

- Prohibited topics discussed

- Conversation incomplete
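
The criteria above amount to a set of checks that must all hold for a scenario to pass. The sketch below expresses that logic; the `Observation` record and its field names are hypothetical, and in practice deciding whether a response "matches expected" is the platform's job rather than a simple boolean.

```python
from dataclasses import dataclass, field


@dataclass
class Observation:
    """Hypothetical record of what happened in one simulated conversation."""
    expected_intent: str
    detected_intent: str
    response_matches_expected: bool
    conversation_completed: bool
    required_tools: set[str] = field(default_factory=set)
    executed_tools: set[str] = field(default_factory=set)
    prohibited_topic_discussed: bool = False


def evaluate(obs: Observation) -> tuple[bool, list[str]]:
    """Return (passed, failure reasons); a scenario passes only with no failures."""
    failures = []
    if obs.detected_intent != obs.expected_intent:
        failures.append(
            f"wrong intent detected: expected {obs.expected_intent}, got {obs.detected_intent}"
        )
    if not obs.response_matches_expected:
        failures.append("agent response does not match expected")
    missing = obs.required_tools - obs.executed_tools
    if missing:
        failures.append("tool not executed when required: " + ", ".join(sorted(missing)))
    if obs.prohibited_topic_discussed:
        failures.append("prohibited topic discussed")
    if not obs.conversation_completed:
        failures.append("conversation incomplete")
    return (not failures, failures)


good = Observation("book_appointment", "book_appointment", True, True,
                   {"calendar"}, {"calendar"})
bad = Observation("book_appointment", "faq", True, False, {"calendar"}, set())
```

Collecting every failure reason, rather than stopping at the first, gives more to work with when looking for failure patterns across scenarios.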

Common Failure Patterns

Intent Misrecognition:

- User intent not understood

- Wrong task initiated

- Fix: Update identity/tasks section

Tool Execution Errors:

- Tool not triggered

- Tool triggered incorrectly

- Fix: Update tool prompting

Guardrail Violations:

- Prohibited topics discussed

- Missing escalation

- Fix: Strengthen guardrails section

Conversation Flow Issues:

- Awkward transitions

- Missing context

- Fix: Improve task workflows
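
Since each failure pattern above points to one configuration area, failed scenarios can be grouped into a worklist by suggested fix. This is an illustrative sketch: the category keys are hypothetical names, and only the fix descriptions come from the list above.

```python
from collections import defaultdict

# Category keys are assumptions; fix text is taken from the patterns above.
SUGGESTED_FIX = {
    "intent_misrecognition": "Update identity/tasks section",
    "tool_execution": "Update tool prompting",
    "guardrail_violation": "Strengthen guardrails section",
    "conversation_flow": "Improve task workflows",
}


def build_worklist(failures: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Group failed scenario names by the configuration fix to try first."""
    worklist = defaultdict(list)
    for scenario, category in failures:
        # Unknown categories fall back to manual review instead of being dropped.
        worklist[SUGGESTED_FIX.get(category, "Review manually")].append(scenario)
    return dict(worklist)


worklist = build_worklist([
    ("Wrong department", "intent_misrecognition"),
    ("Calendar API down", "tool_execution"),
    ("Missing phone number", "tool_execution"),
    ("Slow reply", "latency"),
])
```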

Iterating Based on Results

Users can improve the agent:

- Review failed scenarios

- Identify failure patterns

- Update configuration

- Re-run tests

- Verify improvements

Integration with Development

Users can integrate testing into their workflow:

Development Process:

- Make configuration change

- Run relevant simulation tests

- Review results

- Fix issues

- Re-test until passing

- Deploy to production

CI/CD Integration:

- Automated test runs

- Pre-deployment checks

- Quality gates

- Regression detection
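
Regression detection can be thought of as comparing two test runs: a regression is a scenario that passed before a change and fails after it. The sketch below assumes results are available as a mapping from scenario name to pass/fail; the `find_regressions` helper and this data shape are hypothetical, not a documented export format.

```python
def find_regressions(baseline: dict[str, bool], current: dict[str, bool]) -> list[str]:
    """Return scenarios that passed in the baseline run but fail in the current run.

    Scenarios missing from the current run are not counted as regressions,
    since they were not executed.
    """
    return sorted(
        name
        for name, passed in baseline.items()
        if passed and current.get(name) is False
    )


baseline = {"Book appointment": True, "Calendar API down": False, "Off-topic question": True}
current = {"Book appointment": True, "Calendar API down": True, "Off-topic question": False}
regressions = find_regressions(baseline, current)
# "Calendar API down" improved and "Off-topic question" regressed.
```

A pre-deployment check could block a release whenever this list is non-empty, even if the overall pass rate has improved.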

Simulation vs Manual Testing

Manual Testing (Web/Phone):

- Quick feedback

- Real-time interaction

- Subjective evaluation

- Good for initial testing

Simulation Testing:

- Comprehensive coverage

- Automated execution

- Objective metrics

- Good for regression testing

- Scalable testing

Recommended Approach:

- Manual for rapid iteration

- Simulation for thorough validation

- Both before production

Next Steps

After simulation testing:

- Address all failing scenarios

- Achieve target pass rate (90%+)

- Run final manual tests

- Deploy to production

- Monitor real performance

- Update scenarios based on real calls
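
The 90% target above reduces to a simple check that could serve as a release gate. The following is a minimal sketch, assuming the passed and total counts are read from a test run; the function names are hypothetical.

```python
TARGET_PASS_PERCENT = 90  # target pass rate from the list above


def meets_target(passed: int, total: int, target_percent: int = TARGET_PASS_PERCENT) -> bool:
    """True when passed/total is at or above the target percentage."""
    if total <= 0:
        raise ValueError("total must be positive")
    if not 0 <= passed <= total:
        raise ValueError("passed must be between 0 and total")
    # Integer comparison avoids floating-point rounding at the boundary.
    return passed * 100 >= target_percent * total


def gate_exit_code(passed: int, total: int) -> int:
    """0 when the target is met, 1 otherwise (usable as a process exit code)."""
    return 0 if meets_target(passed, total) else 1


print(meets_target(46, 50))  # 92% of scenarios passed
print(meets_target(44, 50))  # 88% of scenarios passed
```

With 50 scenarios, at least 45 must pass to reach the 90% target.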

Simulation tests complement manual testing. Use both for comprehensive quality assurance.